Just-Dub-It

Video Dubbing via Joint Audio-Visual Diffusion

Experience seamless lip synchronization and voice personality preservation across multiple languages.

A joint audio-visual model is all you need for video dubbing.

JUST-DUB-IT maintains speaker identity and precise lip synchronization across target languages while remaining robust to complex motion and real-world dynamics.

Introduction Video

Diverse Results

JUST-DUB-IT achieves high fidelity and robust synchronization across diverse scenes.

Comparison with State-of-the-Art Methods

Compare our results with existing baselines across different languages and speakers.

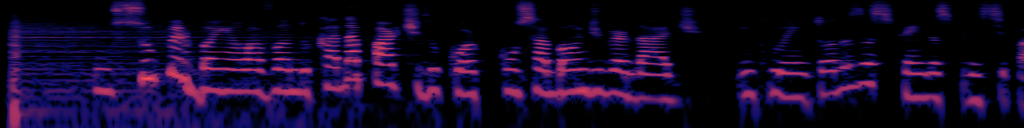

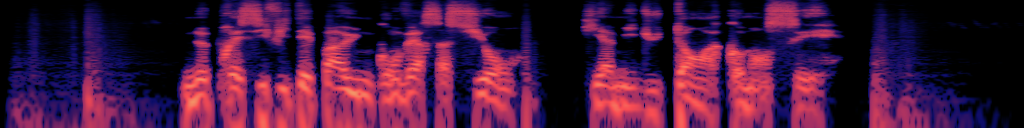

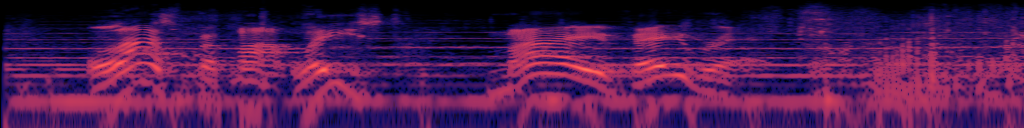

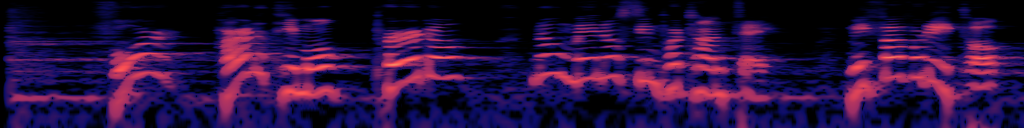

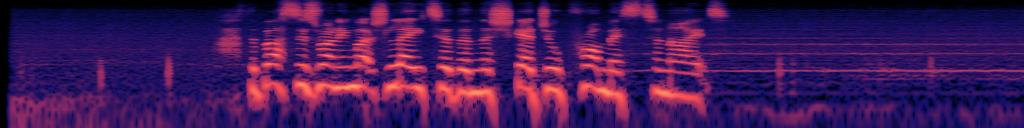

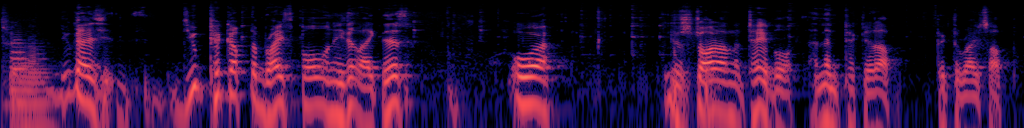

Zootopia 2 Trailer

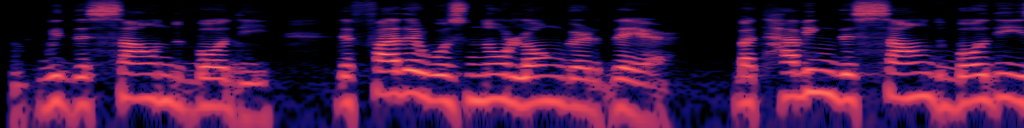

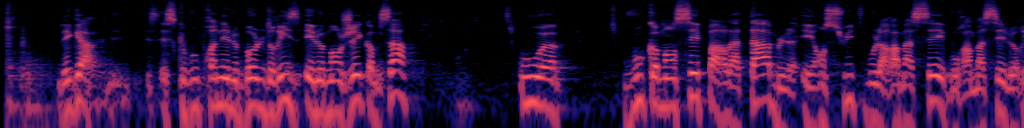

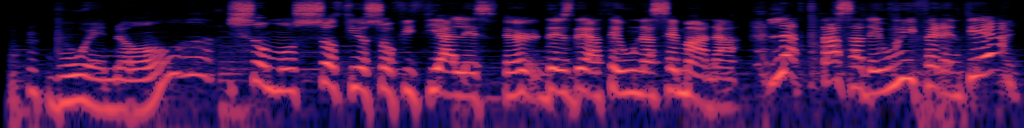

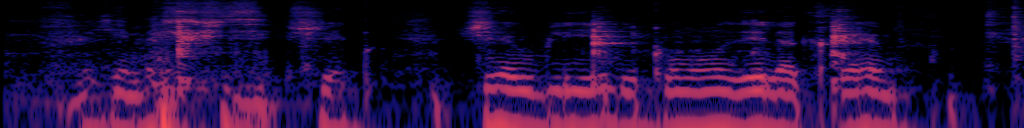

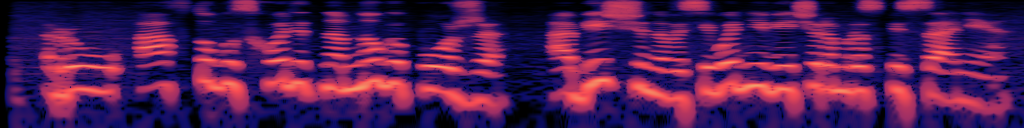

Source

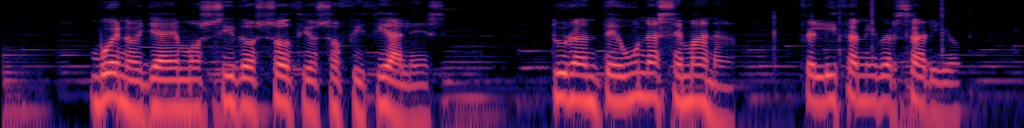

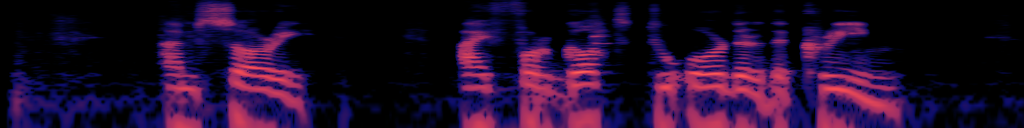

JUST-DUB-IT

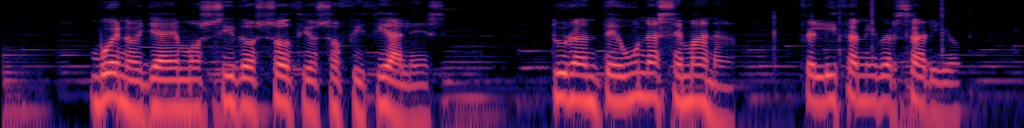

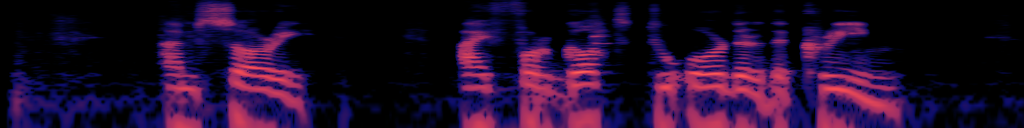

LatentSync

LatentSync produced corrupted output for this case.

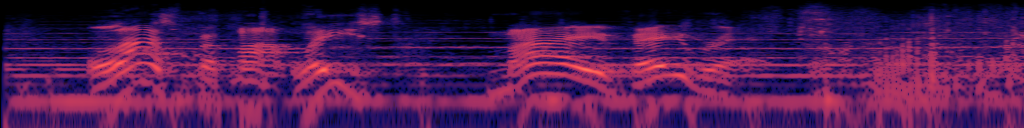

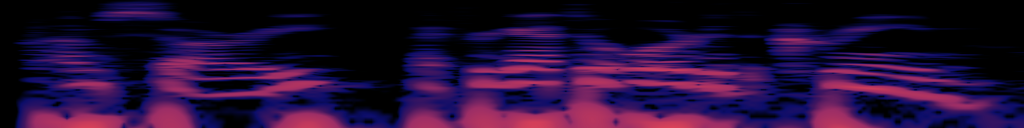

HeyGen

HeyGen fails on this case: it returns the original video unchanged with the dubbed audio attached, so the lips are out of sync.

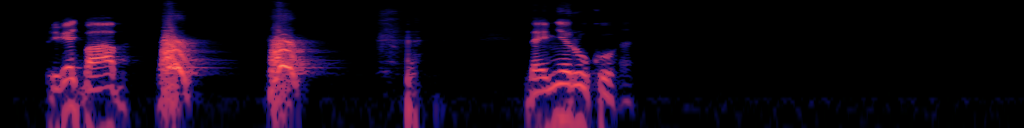

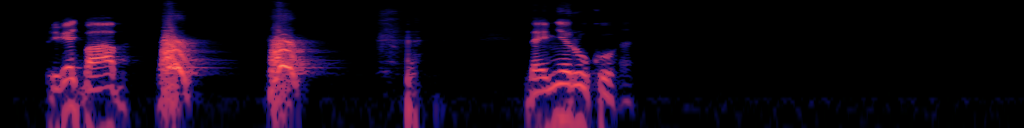

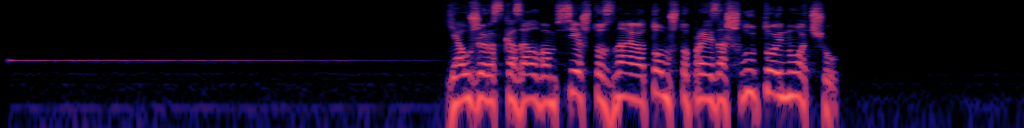

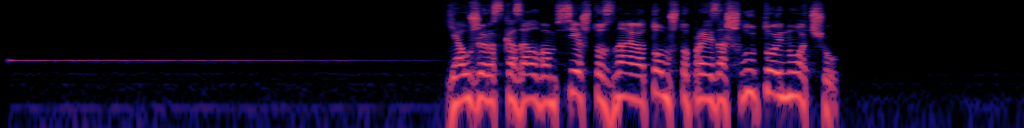

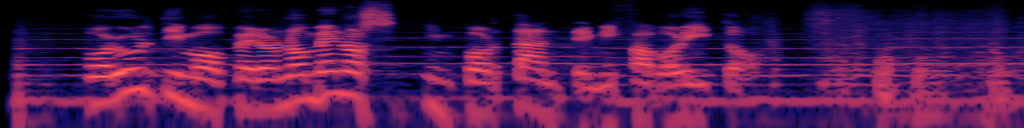

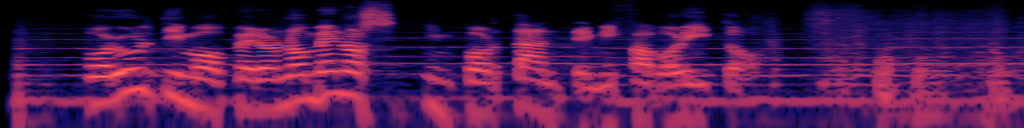

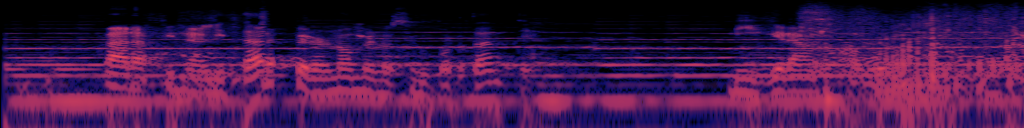

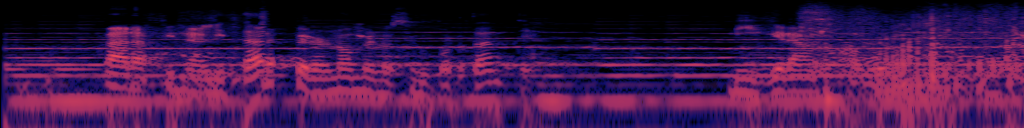

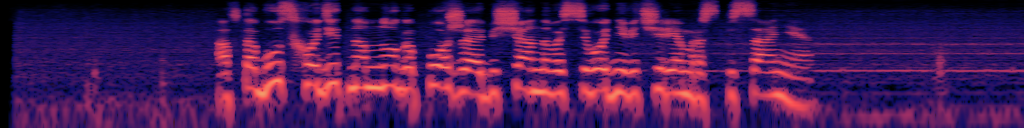

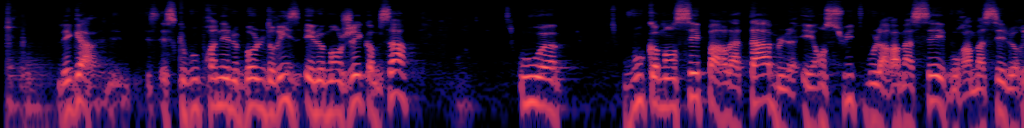

The Mask (Jim Carrey)

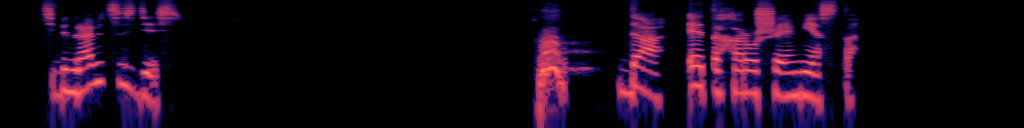

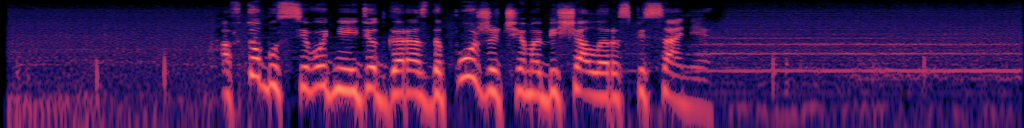

Source

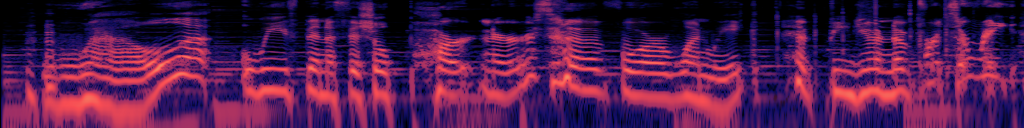

JUST-DUB-IT

LatentSync

LatentSync generates blurry video and fails to preserve the original duration.

HeyGen

HeyGen fails on this case and simply copies the original video frames unchanged.

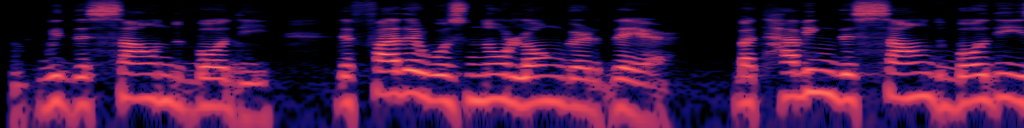

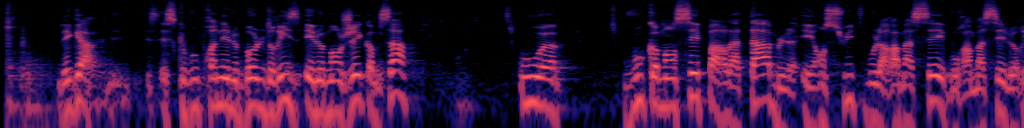

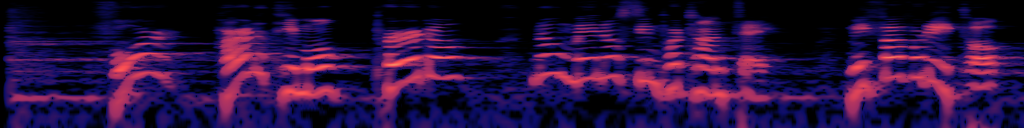

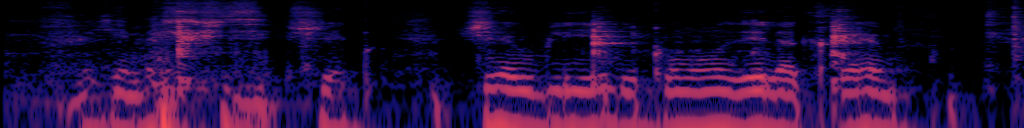

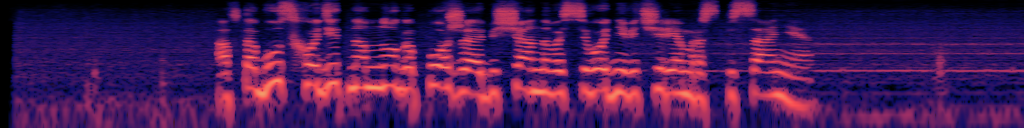

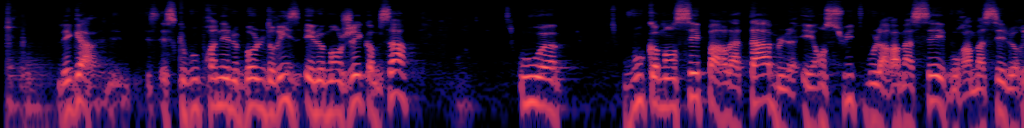

Hans Landa

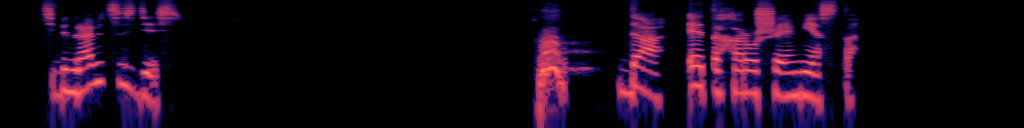

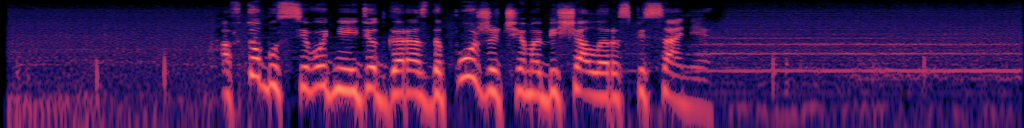

Source

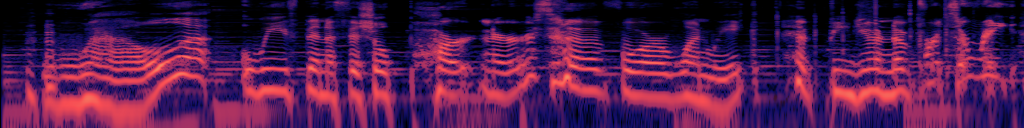

JUST-DUB-IT

LatentSync

HeyGen

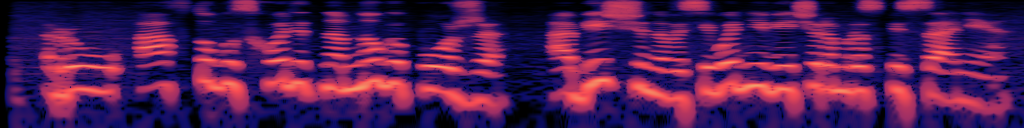

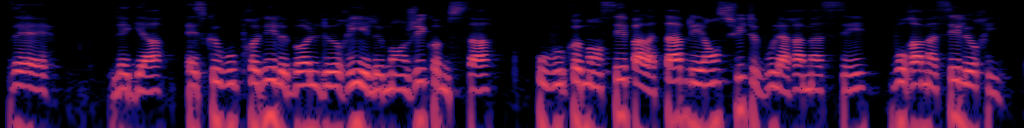

Bus Stop (Russian Dub)

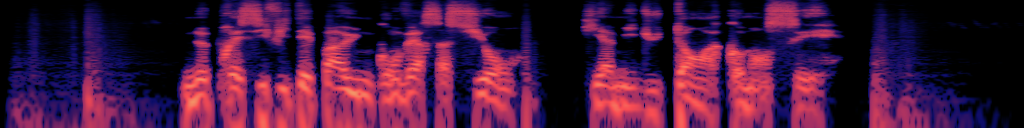

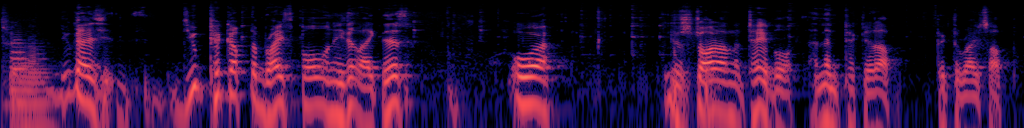

Source

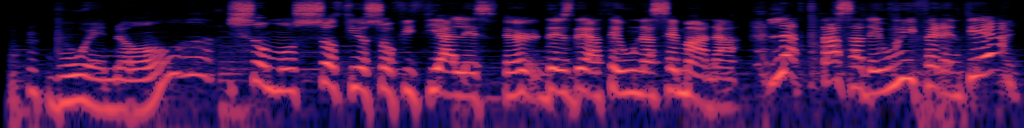

JUST-DUB-IT

LatentSync

HeyGen

Larry David at a restaurant

Source

JUST-DUB-IT

LatentSync

HeyGen

Abstract

Audio-Visual Foundation Models, which are pretrained to jointly generate sound and visual content, have recently shown an unprecedented ability to model multi-modal generation and editing, opening new opportunities for downstream tasks. Among these tasks, video dubbing could greatly benefit from such priors, yet most existing solutions still rely on complex, task-specific pipelines that struggle in real-world settings.

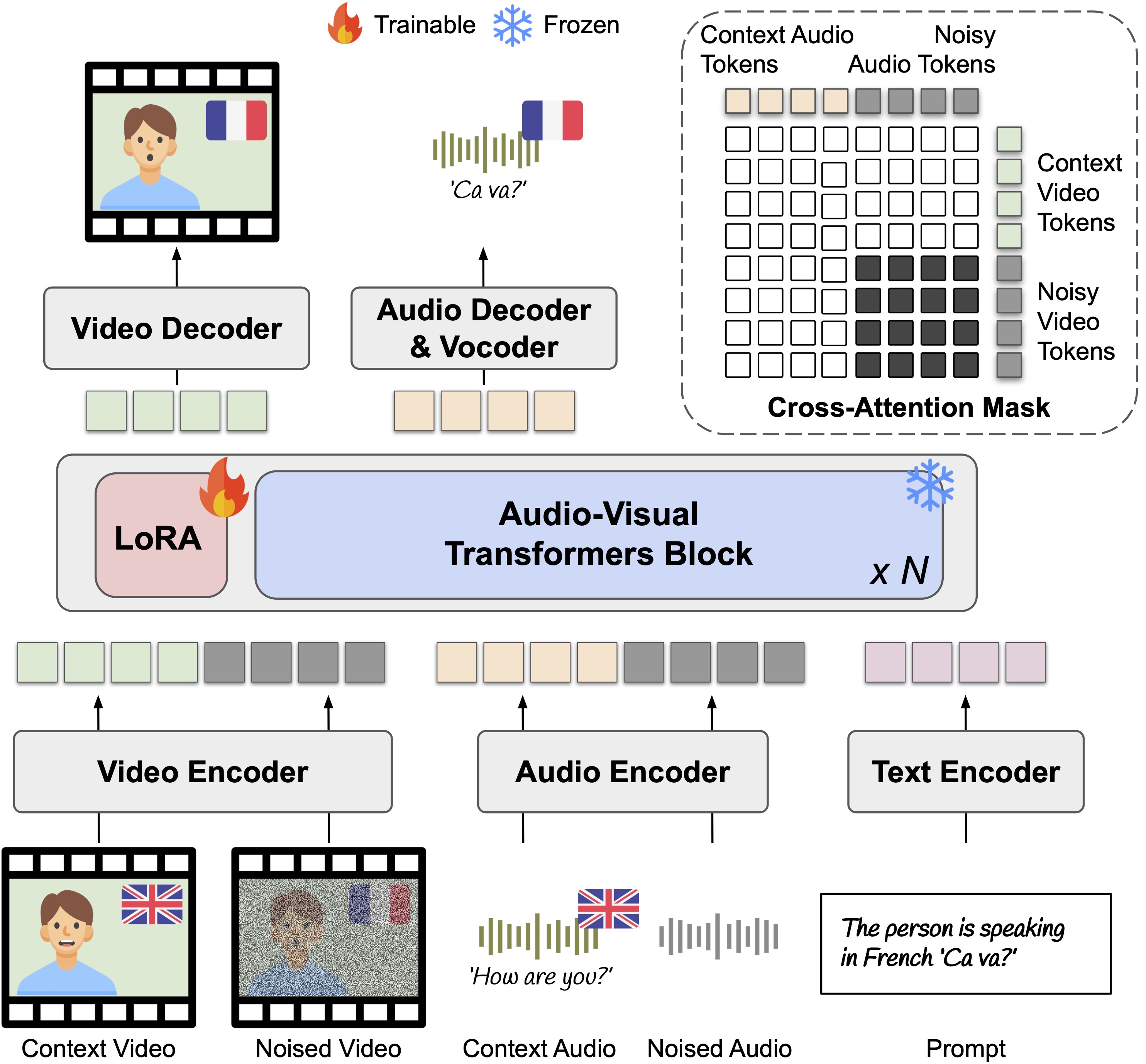

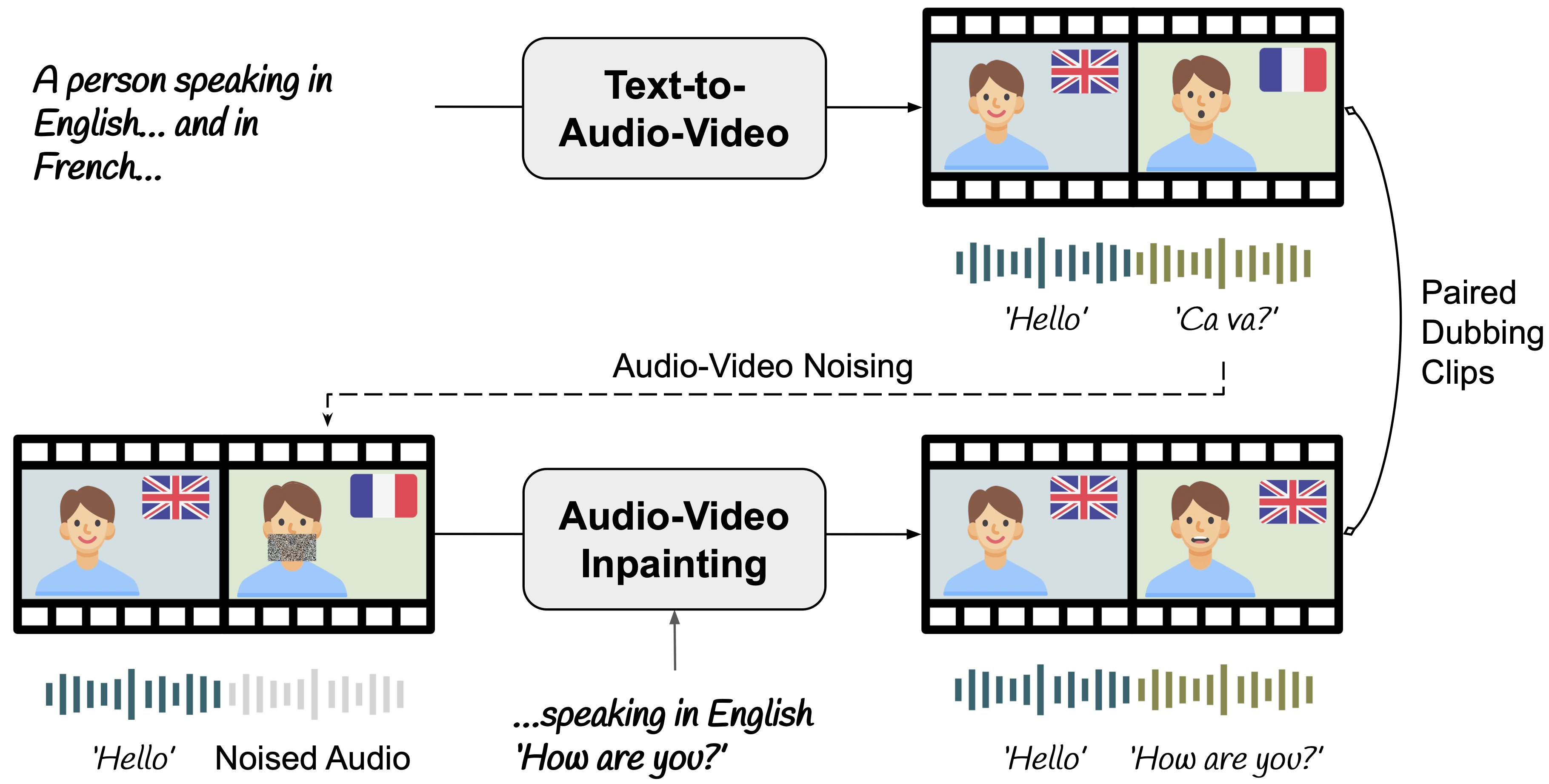

In this work, we introduce a single-model approach that adapts a foundational audio-video diffusion model for video-to-video dubbing via a lightweight LoRA. The LoRA enables the model to condition on an input audio-video clip while jointly generating translated audio and synchronized facial motion. To train this LoRA, we leverage the generative model itself to synthesize paired multilingual videos of the same speaker. Specifically, we generate multilingual videos that switch language within a single clip, and then inpaint the face and audio in each half to match the language of the other half.
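The page does not say which layers carry the LoRA or what rank it uses. As a reminder of what a "lightweight LoRA" adds to a frozen model, here is a minimal NumPy sketch of the standard LoRA formulation on a single linear layer, y = Wx + (alpha / r) B A x. The class name `LoRALinear` and the `rank` and `alpha` values are illustrative assumptions, not the authors' settings.

```python
import numpy as np


class LoRALinear:
    """Frozen linear layer W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight: np.ndarray, rank: int = 8, alpha: float = 8.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                                # frozen pretrained weight (d_out, d_in)
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))   # trainable down-projection
        self.B = np.zeros((d_out, rank))                    # trainable up-projection, zero-initialised
        self.scale = alpha / rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # Base path uses the frozen weight; the low-rank path is the only part trained.
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size

    def merged_weight(self) -> np.ndarray:
        # After training, the update can be folded into W for zero inference overhead.
        return self.weight + self.scale * self.B @ self.A


# Usage: wrap a (hypothetical) 64x32 projection with a rank-4 adapter.
W = np.random.default_rng(1).normal(size=(64, 32))
layer = LoRALinear(W, rank=4)
x = np.random.default_rng(2).normal(size=(5, 32))
y = layer(x)
```

Because `B` starts at zero, the adapted model initially reproduces the pretrained model exactly, and only 4 * 32 + 64 * 4 = 384 parameters are trained here instead of the 2,048 in `W`. This is why a LoRA can specialise a large audio-visual prior without overwriting it.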

By leveraging the rich generative prior of the audio-visual model, our approach preserves speaker identity and lip synchronization while remaining robust to complex motion and real-world dynamics. We demonstrate that our approach produces high-quality dubbed videos with improved visual fidelity, lip synchronization, and robustness compared to existing dubbing pipelines.

Method

Synthetic Data Pipeline

- Language-Switching: Establishing ground-truth identity references via natural bilingual transitions.

- Counterfactual Inpainting: Generating perfectly aligned multilingual pairs with identical pose and background.

- Latent-Aware Masking: Eliminating motion leakage by computing the effective receptive field in latent space.
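The idea behind latent-aware masking can be illustrated with a small sketch, under stated assumptions. If a face mask drawn in pixel space is naively downsampled, latent cells just outside it can still encode the source lip motion through the encoder's receptive field, and that motion leaks into the result. The NumPy function below marks every latent cell whose footprint touches a masked pixel and then dilates the mask by a margin standing in for the effective receptive field. It assumes a plain strided VAE compression; `latent_edit_mask`, the strides (32 spatial, 8 temporal), and the one-cell margins are hypothetical, not values from the paper.

```python
import numpy as np


def latent_edit_mask(pixel_mask: np.ndarray,
                     spatial_stride: int = 32,
                     temporal_stride: int = 8,
                     spatial_margin: int = 1,
                     temporal_margin: int = 1) -> np.ndarray:
    """Map a pixel-space edit mask (T, H, W) to a boolean latent-space mask.

    Hypothetical strides and margins; real values depend on the VAE in use.
    """
    if pixel_mask.ndim != 3:
        raise ValueError("expected a (T, H, W) mask")
    T, H, W = pixel_mask.shape
    t_lat = -(-T // temporal_stride)   # ceiling division
    h_lat = -(-H // spatial_stride)
    w_lat = -(-W // spatial_stride)

    # Zero-pad to a whole number of latent cells, then OR-pool each cell's footprint.
    padded = np.zeros((t_lat * temporal_stride, h_lat * spatial_stride,
                       w_lat * spatial_stride), dtype=bool)
    padded[:T, :H, :W] = pixel_mask.astype(bool)
    touched = padded.reshape(t_lat, temporal_stride, h_lat, spatial_stride,
                             w_lat, spatial_stride).any(axis=(1, 3, 5))

    # Binary dilation via shifted ORs: also regenerate neighbouring cells whose
    # receptive fields overlap the edited region, so source motion cannot leak in.
    rt, rs = temporal_margin, spatial_margin
    p = np.pad(touched, ((rt, rt), (rs, rs), (rs, rs)))
    out = np.zeros_like(touched)
    for dt in range(2 * rt + 1):
        for dh in range(2 * rs + 1):
            for dw in range(2 * rs + 1):
                out |= p[dt:dt + t_lat, dh:dh + h_lat, dw:dw + w_lat]
    return out


# Usage: a single masked pixel in a 16x128x128 clip.
mask = np.zeros((16, 128, 128), dtype=bool)
mask[0, 40, 40] = True
lat = latent_edit_mask(mask)
```

In this toy case the pixel falls in latent cell (0, 1, 1). The one-cell dilation grows the region to a 2 x 3 x 3 block of 18 cells, clipped at the clip boundary in time.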

In-Context LoRA Training

- Structural Anchoring: Leveraging source latents as anchors for context-aware completion.

- Isolated Cross-Attention: A structured attention bias (matrix M) that prevents cross-modal signal leakage.

- Unified Signal Flow: Adapting joint audio-visual priors via efficient, lightweight LoRA adapters.
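The page does not define the structure of M. One plausible reading, sketched here purely as an assumption, is a block-structured additive bias in the audio-video cross-attention. With source and target tokens concatenated in context, each video token may attend only to audio tokens of the same clip (source to source, target to target), so source-language speech cannot steer the generated lip motion. The segment labels, `NEG_INF`, and the function names below are hypothetical.

```python
import numpy as np

NEG_INF = -1e9  # large negative bias; its exp() underflows to exactly 0


def expand_labels(segments):
    """[(label, n_tokens), ...] -> one label per token, in sequence order."""
    return np.array([label for label, n in segments for _ in range(n)])


def isolation_bias(query_segments, key_segments):
    """Additive bias M: 0 where query and key share a clip label, NEG_INF otherwise.

    Every query label must appear among the keys, or that row has no valid target.
    """
    q = expand_labels(query_segments)
    k = expand_labels(key_segments)
    return np.where(q[:, None] == k[None, :], 0.0, NEG_INF)


def biased_cross_attention(Q, K, V, M):
    """Scaled dot-product attention with an additive bias; returns output and weights."""
    scores = Q @ K.T / np.sqrt(Q.shape[-1]) + M
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights


# Usage: video tokens (queries) cross-attend to audio tokens (keys/values).
rng = np.random.default_rng(0)
video = [("source", 3), ("target", 4)]
audio = [("source", 2), ("target", 5)]
M = isolation_bias(video, audio)
Q = rng.normal(size=(7, 16))
K = rng.normal(size=(7, 16))
V = rng.normal(size=(7, 16))
out, w = biased_cross_attention(Q, K, V, M)
```

Adding the bias before the softmax, rather than masking afterwards, keeps each row a proper distribution over the allowed keys. Blocked entries receive exactly zero weight, so no signal crosses between the source and target clips in this layer.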